Intro into Jobs

Starting with RoadRunner >= 2.4, a queuing system (aka "jobs") is available. This plugin allows you to move arbitrary "heavy" code into separate tasks to execute them asynchronously in an external worker, which will be referred to as "consumer" in this documentation.

The RoadRunner PHP library provides both API implementations: The client one, which allows you to dispatch tasks, and the server one, which provides the consumer who processes the tasks.

Configuration

After installing all the required dependencies, you need to configure this plugin. To enable it, add a jobs section to your configuration.

Here is an example of configuration:

The rpc section is responsible for client settings. It is at this address that we will connect, dispatching tasks to the queue.

The server section is responsible for configuring the server. Previously, we have already met with its description when setting up the PHP Worker.

And finally, the jobs section is responsible for the work of the queues themselves. It contains information on how the RoadRunner should work with connections to drivers, what can be handled by the consumer, and other queue-specific settings.

Common Configuration

Let's now focus on the common settings of the queue server. In full, it may look like this:

Above is a complete list of all possible common Jobs settings. Let's now figure out what they are responsible for.

num_pollers: The number of threads that simultaneously read from the priority queue and send payloads to the workers. There is no optimal number; it depends heavily on the performance of the PHP worker. For example, echo workers can process over 300k jobs per second with 64 pollers (on a 32 core CPU). num_pollers should not be less than the number of workers, so that all of them are properly loaded with jobs.

timeout: The internal Golang context timeout (in seconds). It applies, for example, when the connection was dropped, when your push arrived in the middle of a redial state with a timeout of 10 minutes (while this timeout is, e.g., 1 minute), or when the queue is full. If the timeout is exceeded, your call will be rejected with an error. Default: 60 (seconds).

pipeline_size: The size of the binary heap priority queue (PQ). The priority queue stores jobs in order of priority; a lower number means a higher priority. The priority can be set for the job or inherited from the pipeline. When worker performance is poor, the PQ accumulates jobs until pipeline_size is reached. After that, the PQ is blocked until the workers have processed all the jobs in it.

A blocked PQ means that you can still push a job into the driver, but RoadRunner will not read that job until the PQ is empty. If RoadRunner is running with jobs in the PQ, they won't be lost, because jobs are not removed from the driver's queue until after Ack.
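The ordering and blocking rules described above can be illustrated with a small, language-neutral sketch in Python. This is not RoadRunner's implementation: the `BoundedPriorityQueue` class and its method names are hypothetical, and the sketch only models two rules from the text, namely that a lower number means a higher priority and that nothing more is read from the driver once `pipeline_size` is reached, until the queue has been drained.

```python
import heapq
import itertools


class BoundedPriorityQueue:
    """Toy model of the PQ: lower number = higher priority, bounded size."""

    def __init__(self, pipeline_size):
        self.pipeline_size = pipeline_size
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps insertion order
        self._blocked = False

    def offer(self, priority, job):
        """Try to read a job from the driver into the PQ; False if blocked."""
        if self._blocked:
            return False
        heapq.heappush(self._heap, (priority, next(self._order), job))
        if len(self._heap) >= self.pipeline_size:
            self._blocked = True  # limit reached: stop reading from the driver
        return True

    def take(self):
        """Hand the highest-priority job to a worker."""
        _, _, job = heapq.heappop(self._heap)
        if not self._heap:
            self._blocked = False  # drained: reading may resume
        return job


pq = BoundedPriorityQueue(pipeline_size=3)
pq.offer(10, "report")
pq.offer(1, "payment")
pq.offer(5, "email")            # size limit reached, the queue is now blocked
accepted = pq.offer(1, "late")  # stays in the driver until the PQ is empty
order = [pq.take(), pq.take(), pq.take()]
```

In this model the rejected job is not lost; it simply remains in the driver and can be offered again after the last `take()`.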

pool: All settings in this section are similar to the worker pool settings described on the configuration page.

consume: Contains an array of the names of all queues specified in the "pipelines" section that should be processed by the consumer specified in the global "server" section (see the PHP worker's settings).

pipelines: This section contains a list of all queues created in the RoadRunner. The key is a unique queue identifier, and the value is an object with the driver-specific configuration (we will talk about this later).

PHP Client (Producer)

Installation

To get access from the PHP code, you should install the corresponding dependency using Composer.

Now that we have the server configured, we can start writing our first code to send the task to the queue. But before we do that, we need to connect to our server. And to do that, it is enough to create a Jobs instance.

In this case we did not specify any connection settings. And this is really not necessary if this code is executed in a RoadRunner environment. However, in case you need to connect from a third-party application (e.g. a CLI command), you need to specify the settings explicitly.

To interact with the RoadRunner jobs plugin, you will need to have the RPC defined in the rpc configuration section. You can refer to the documentation page here to learn more about the configuration and installation.

When the connection is created and the availability of the functionality is checked, we can connect to the queue we need using the connect() method.

Task Creation

Before submitting a task to the queue, you should create this task. To create a task, it is enough to call the corresponding create() method.

The name of the task does not have to be a class. Here we are using SendEmailTask just for convenience.

This method also takes a second argument with the data needed to complete the task.

The payload must be a string. You need to serialize the data yourself; RoadRunner does not provide any serialization tools.
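Because the payload is just a string, the producer and the consumer have to agree on a format. The following language-neutral Python sketch assumes JSON, which is only one possible choice; the helper names `encode_payload` and `decode_payload` are hypothetical and are not part of any RoadRunner package.

```python
import json


def encode_payload(data):
    """Serialize task data to a compact, deterministic JSON string."""
    return json.dumps(data, separators=(",", ":"), sort_keys=True)


def decode_payload(payload):
    """Restore the task data on the consumer side."""
    return json.loads(payload)


payload = encode_payload({"email": "user@example.com", "attempt": 1})
restored = decode_payload(payload)
```

Any other string encoding works the same way, as long as both sides use the same one. A header can carry the name of the format if several are in use.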

In addition, the method takes a third argument of type Spiral\RoadRunner\Jobs\OptionsInterface, where you can pass an object with predefined options.

You can also redefine the options for an already created task.

Task creation for Kafka driver

Please note that a queue with the Kafka driver requires a task with a specified topic. In this case you have to use Spiral\RoadRunner\Jobs\KafkaOptionsInterface, because it has all the methods required for working with the Kafka driver. Connect to the queue using a Spiral\RoadRunner\Jobs\KafkaOptionsInterface implementation. To redefine these options for a particular message, simply pass another Spiral\RoadRunner\Jobs\KafkaOptionsInterface implementation as the third parameter of the create method.

As noted above, we use interfaces everywhere to configure task options, and developers can create their own implementations of those interfaces.

Task Dispatching

To send tasks to the queue, we can use two methods: dispatch() and dispatchMany(). The first one sends a single task to the queue and returns a dispatched task object, while the second one dispatches multiple tasks and returns an array. Moreover, the second method delivers all tasks in the array at once, as opposed to sending each task separately.

Task Immediately Dispatching

If you do not want to create a new task and then immediately dispatch it, you can simplify the work by using the push method. However, this functionality has a number of limitations. When you create a new task:

You can flexibly configure additional task capabilities using a convenient fluent interface.

You can prepare a common task for several others and use it as a basis to create several alternative tasks.

You can create several different tasks and collect them into one collection and send them to the queue at once (using the so-called batching).

In the case of immediate dispatch, you will have access to only the basic features. The push() method accepts two required arguments: the first is the name of the task to be executed, and the second is the payload data for the task. In addition, the method takes a third argument of type Spiral\RoadRunner\Jobs\OptionsInterface, where you can pass an object with predefined options. Moreover, this method is designed to send only one task.

Task Headers

In addition to the data itself, we can send additional metadata that is not related to the payload of the task, that is, headers. In them, we can pass any additional information, for example: Encoding of messages, their format, the server's IP address, the user's token or session id, etc.

Headers can only contain string values and are not serialized in any way during transmission, so be careful when specifying them.

To add a new header to the task, you can use methods similar to those of PSR-7.

That is:

withHeader(string, iterable<string>|string): self - Return an instance with the provided value replacing the specified header.

withAddedHeader(string, iterable<string>|string): self - Return an instance with the specified header appended with the given value.

withoutHeader(string): self - Return an instance without the specified header.
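The semantics of these three methods (replace, append, remove, each returning a new instance) can be modeled with a short, language-neutral Python sketch. The `Task` class below is hypothetical and only mirrors the behavior described above; it is not the PHP API itself.

```python
def _as_list(value):
    """Normalize a single string or an iterable of strings to a list."""
    return [value] if isinstance(value, str) else list(value)


class Task:
    """Hypothetical immutable task that only models header handling."""

    def __init__(self, name, headers=None):
        self.name = name
        # Copy the value lists so instances never share mutable state.
        self.headers = {k: list(v) for k, v in (headers or {}).items()}

    def with_header(self, name, value):
        """Return a copy in which the header is replaced."""
        return Task(self.name, {**self.headers, name: _as_list(value)})

    def with_added_header(self, name, value):
        """Return a copy in which the value is appended to the header."""
        existing = self.headers.get(name, [])
        return Task(self.name, {**self.headers, name: existing + _as_list(value)})

    def without_header(self, name):
        """Return a copy without the header."""
        kept = {k: v for k, v in self.headers.items() if k != name}
        return Task(self.name, kept)


original = Task("SendEmailTask")
tagged = original.with_header("encoding", "utf-8")
extended = tagged.with_added_header("encoding", ["gzip"])
stripped = extended.without_header("encoding")
```

Each call leaves the instance it was called on untouched, which is why the result has to be assigned to a variable before dispatching.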

Task Delayed Dispatching

If you want to specify that a job should not be immediately available for processing by a jobs worker, you can use the delayed job option. For example, let's specify that a job shouldn't be available for processing until 42 minutes after it has been dispatched:

Consumer Usage

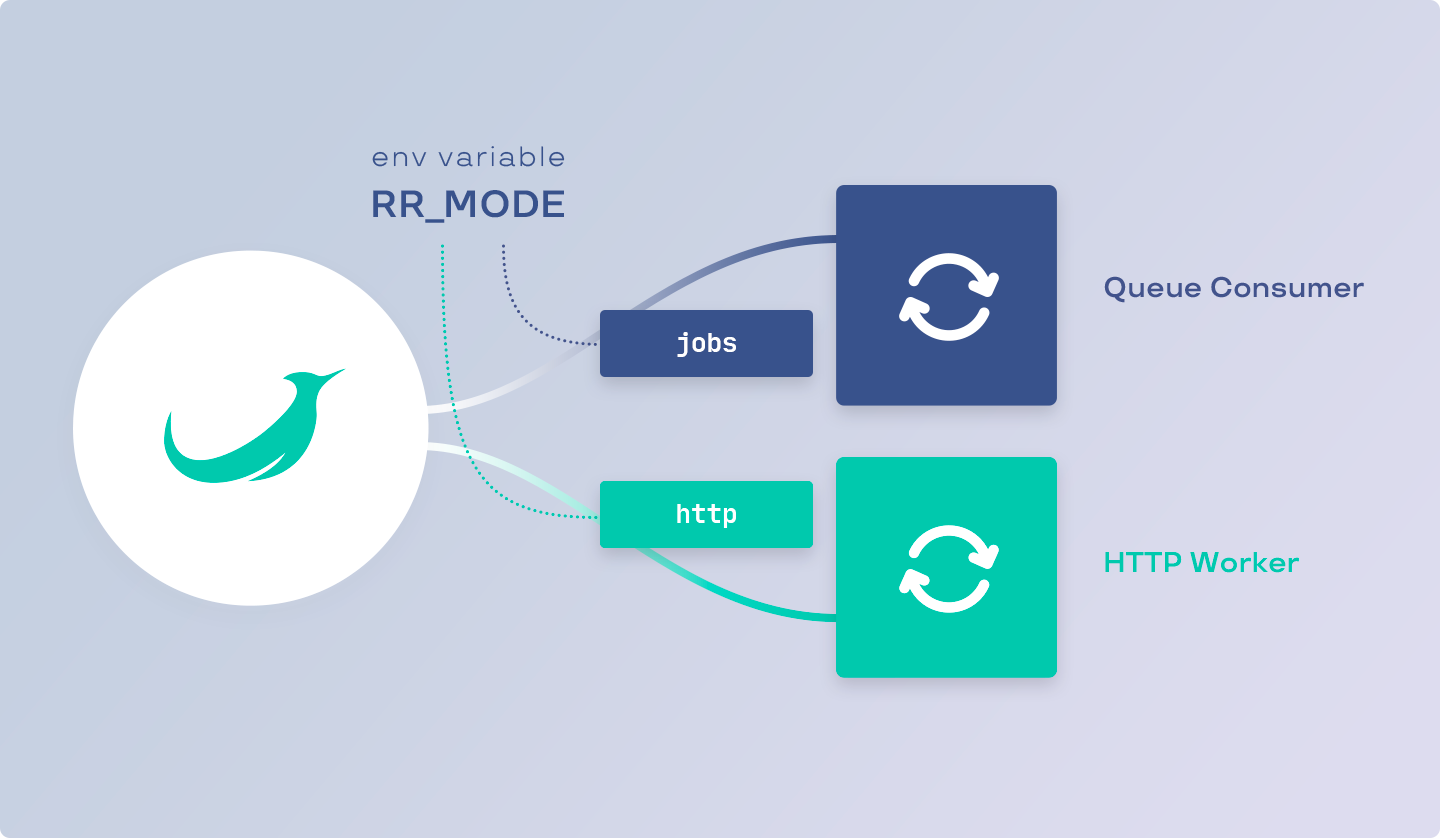

You have probably already noticed that when setting up a jobs consumer, the "server" configuration section is used, in which a PHP file-handler is defined. It is exactly the same one we used earlier to write an HTTP Worker.

Does this mean that if we want to use the Jobs Worker, then we can no longer use the HTTP Worker? No, it does not!

When the RoadRunner starts, it spawns several workers defined in the "server" config section (by default, the number of workers is equal to the number of CPU cores). While spawning the workers, it tells each of them in advance the mode in which that worker will be used. The mode is passed in the environment variable RR_MODE: for the HTTP worker the value is "http", and for the Jobs worker the value is "jobs".

There are several ways to check the operating mode from the code:

By getting the value of the env variable.

Or using the appropriate API method (from the spiral/roadrunner-worker package).

The second option may be preferable in cases where you need to change the RoadRunner's mode, for example, in tests.

After we are convinced of the specialization of the worker, we can write the corresponding code for processing tasks. To get information about the available task in the worker, use the $consumer->waitTask(): ReceivedTaskInterface method.

After you receive the task from the queue, you can start processing it in accordance with the requirements. Don't worry about how much memory or time this execution takes - the RoadRunner takes over the tasks of managing and distributing tasks among the workers.

After you have processed the incoming task, you can call the complete(): void method. This tells the RoadRunner that you are ready to handle the next task.

We got acquainted with the possibilities of receiving and processing tasks, but we do not yet know what the received task is. Let's see what data it contains.

Task Failing

In some cases, an error may occur during task processing. In this case, you should use the fail() method, informing the RoadRunner about it. The method takes two arguments. The first argument is required and expects any string or string-like (instance of Stringable, for example any exception) value with an error message. The second is optional and tells the server to restart this task.

If the headers need to be updated before the task is restarted, you can use the appropriate method to add or change the headers of the received task.

In addition, you can re-specify the task execution delay. For example, in the code above, you may have noticed the use of a custom header "retry-delay", the value of which doubled after each restart, so this value can be used to specify the delay in the next task execution.
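The doubling of a "retry-delay" header can be sketched independently of the PHP API. The Python snippet below is a language-neutral illustration only: the function names, the initial delay of 1 second and the upper limit of one hour are assumptions made for the example, and header values are kept as strings because headers can only contain strings.

```python
def next_retry_delay(headers, initial=1, limit=3600):
    """Double the previous delay, starting at `initial`, capped at `limit`."""
    values = headers.get("retry-delay", [])
    if not values:
        return initial  # first failure: no header has been set yet
    return min(int(values[0]) * 2, limit)


def headers_for_retry(headers):
    """Return updated headers plus the delay to use for the restart."""
    delay = next_retry_delay(headers)
    updated = dict(headers)
    updated["retry-delay"] = [str(delay)]  # header values must be strings
    return updated, delay


first, delay_1 = headers_for_retry({})
second, delay_2 = headers_for_retry(first)
third, delay_3 = headers_for_retry(second)
```

The returned delay would then be applied to the task before it is failed with the restart flag set, so that each attempt waits twice as long as the previous one.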

Received Task ID

Each task in the queue has a unique identifier. This allows you to unambiguously identify the task among all existing tasks in all queues, no matter what name it was received from.

In addition, it is worth noting that the identifier is not a sequential number that increases indefinitely. This means that an identifier collision is still possible, but extremely unlikely: about 2.71 quintillion identifiers would have to be generated before a collision becomes likely. Even if you send 1 billion tasks per second, it will take about 85 years to generate that many.
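The figures of 2.71 quintillion and roughly 85 years are consistent with the birthday bound for a random version-4 UUID. The Python sketch below checks that arithmetic under the assumption, not stated in the text, that the identifier carries 122 random bits; the function name is hypothetical.

```python
import math

RANDOM_BITS = 122                     # assumption: version-4 UUID
IDS_PER_SECOND = 1_000_000_000        # 1 billion tasks per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def ids_for_collision_probability(p, bits=RANDOM_BITS):
    """Birthday approximation: n ~ sqrt(2 * N * ln(1 / (1 - p)))."""
    space = 2 ** bits
    return math.sqrt(2 * space * math.log(1 / (1 - p)))


# Number of identifiers needed for a 50% chance of at least one collision.
ids_needed = ids_for_collision_probability(0.5)
years = ids_needed / IDS_PER_SECOND / SECONDS_PER_YEAR
```

Under this assumption `ids_needed` comes out at about 2.71 quintillion and `years` at roughly 86, which matches the order of magnitude quoted above.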

If you want to store this identifier in a database, it is recommended to use a binary representation (16 bytes long if your DB requires blob sizes).
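As a rough illustration of the 16-byte binary form, the Python sketch below converts an identifier to bytes and back. It assumes the identifier is a UUID in its canonical textual form; the sample value and the helper names are made up for the example.

```python
import uuid


def id_to_binary(task_id):
    """Convert a textual UUID into its 16-byte binary representation."""
    return uuid.UUID(task_id).bytes


def binary_to_id(raw):
    """Restore the canonical textual form from the stored bytes."""
    return str(uuid.UUID(bytes=raw))


task_id = "0f8fad5b-d9cb-469f-a165-70867728950e"  # sample value only
raw = id_to_binary(task_id)
```

Storing `raw` in a fixed 16-byte binary column takes less than half the space of the 36-character string and keeps index entries compact.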

Received Task Queue

Since a worker can process several different queues at once, you may need to somehow determine from which queue the task came. To get the name of the queue, use the getQueue(): string method.

For example, you can select different task handlers based on different types of queues.
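Selecting a handler by queue name usually comes down to a lookup table. The Python sketch below is language-neutral and hypothetical: the queue names "emails" and "reports" and both handler functions are invented for the example, and in PHP the key would be the value returned by getQueue().

```python
def handle_email(payload):
    """Placeholder handler for tasks from the hypothetical 'emails' queue."""
    return f"email:{payload}"


def handle_report(payload):
    """Placeholder handler for tasks from the hypothetical 'reports' queue."""
    return f"report:{payload}"


HANDLERS = {
    "emails": handle_email,
    "reports": handle_report,
}


def route(queue, payload):
    """Pick the handler registered for the queue the task came from."""
    try:
        handler = HANDLERS[queue]
    except KeyError:
        raise LookupError(f"no handler registered for queue {queue!r}") from None
    return handler(payload)


result = route("emails", "hello")
```

Raising on an unknown queue, rather than silently skipping the task, makes a misconfigured consume list visible, since the task can then be failed explicitly.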

Task auto acknowledge

RoadRunner v2.10.0+ supports an auto acknowledge task option. You can use this option to acknowledge a task right after RR receives it from the queue. It is suited to non-important tasks that may fail or break the worker.

To use this option you may update the Options:

Or manage it manually for each task:

Received Task Name

The task name is some identifier associated with a specific type of task. For example, it may contain the name of the task class so that in the future we can create an object of this task by passing the required data there. To get the name of the task, use the getName(): string method.

Thus, we can implement the creation of a specific task with certain data for this task.
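The idea of turning a task name plus its payload into a concrete task object can be sketched as a small registry. The Python snippet below is a language-neutral illustration: the `SendEmailTask` fields, the registry and the JSON payload format are all assumptions made for the example.

```python
import json
from dataclasses import dataclass


@dataclass
class SendEmailTask:
    """Hypothetical task object built from a received task."""
    email: str
    subject: str


# Maps the received task name to the class that should be instantiated.
TASK_TYPES = {"SendEmailTask": SendEmailTask}


def build_task(name, payload):
    """Create the task object for `name` from a JSON-encoded payload."""
    cls = TASK_TYPES[name]
    return cls(**json.loads(payload))


task = build_task(
    "SendEmailTask",
    '{"email": "user@example.com", "subject": "Welcome"}',
)
```

An explicit registry avoids instantiating arbitrary classes from a name that arrives over the queue.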

Received Task Payload

Each task contains a set of arbitrary user data to be processed within the task. To obtain this data, you can use one of the available methods:

getPayload

Also, you can get payload data in string format using the getPayload method. This method may be useful to you in cases of transferring all data to the DTO.

Received Task Headers

If you need to get any additional information that is not part of the task payload, you should use headers.

For example, headers can convey information about the serializer, encoding, or other metadata.

The interface for receiving headers is completely similar to PSR-7, so methods are available to you:

getHeaders(): array<string, array<string, string>> - Retrieves all task header values.

hasHeader(string): bool - Checks if a header exists by the given name.

getHeader(string): array<string, string> - Retrieves a message header value by the given name.

getHeaderLine(string): string - Retrieves a comma-separated string of the values for a single header by the given name.
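The relationship between the list form and the comma-separated form of a header can be shown in a few lines. This Python sketch is language-neutral and hypothetical; in particular, the exact separator (with or without a space after the comma) is an assumption and may differ from the PHP implementation.

```python
def has_header(headers, name):
    """True if the header is present."""
    return name in headers


def get_header(headers, name):
    """All values of a header, or an empty list if it is missing."""
    return list(headers.get(name, []))


def get_header_line(headers, name, separator=","):
    """All values of a header joined into one comma-separated string."""
    return separator.join(get_header(headers, name))


headers = {"accept": ["json", "xml"], "encoding": ["utf-8"]}
line = get_header_line(headers, "accept")
```

A missing header yields an empty list and an empty string rather than an error, which mirrors how PSR-7 describes the equivalent methods.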

We got acquainted with the data and capabilities that we have in the consumer. Let's now get down to the basics - sending these messages.

Advanced Functionality

In addition to the main functionality of queues for sending and processing tasks, the API has additional functionality that is not directly related to these tasks. Now that we have examined the main functionality, it's time to look at the advanced features.

Creating A New Queue

In the very first chapter, we got acquainted with the queue settings and their drivers. We can do almost the same thing from PHP code by calling the create() method on a Jobs instance.

To create a new queue, the following types of DTO are available to you:

Spiral\RoadRunner\Jobs\Queue\AMQPCreateInfo for AMQP queues.

Spiral\RoadRunner\Jobs\Queue\BeanstalkCreateInfo for Beanstalk queues.

Spiral\RoadRunner\Jobs\Queue\MemoryCreateInfo for in-memory queues.

Spiral\RoadRunner\Jobs\Queue\SQSCreateInfo for SQS queues.

Spiral\RoadRunner\Jobs\Queue\KafkaCreateInfo for Kafka queues.

Spiral\RoadRunner\Jobs\Queue\BoltdbCreateInfo for Boltdb queues.

Such a DTO with the appropriate settings should be passed to the create() method to create the corresponding queue:

Getting A List Of Queues

To get a list of all available queues, you just need to iterate over the Jobs instance with the foreach operator. Each element of this collection corresponds to a specific queue registered in the RoadRunner. To simply get the number of all available queues, you can pass the Jobs object to the count() function.

Pausing A Queue

In addition to the ability to create new queues, there may be times when the processing of a queue needs to be suspended. This can happen, for example, when deploying a new application, when the processing of tasks should be suspended while the new application code is being deployed.

In this case, the code will be pretty simple. It is enough to call the pause() method, passing it the names of the queues. To resume the work of the queues (unpause), call the similar resume() method.

RPC Interface

All communication between PHP and Go is done via RPC calls with protobuf payloads. You can find versioned proto-payloads here: Proto.

Push(in *jobsv1.PushRequest, out *jobsv1.Empty) error - The arguments: the first argument is a PushRequest, which contains one field of the Job being sent to the queue; the second argument is Empty, which means that the function does not return a result (returns nothing). An error is returned if the request fails.

PushBatch(in *jobsv1.PushBatchRequest, out *jobsv1.Empty) error - The arguments: the first argument is a PushBatchRequest, which contains one repeated (list) field of the Job being sent to the queue; the second argument is Empty, which means that the function does not return a result. An error is returned if the request fails.

Pause(in *jobsv1.Pipelines, out *jobsv1.Empty) error - The arguments: the first argument is a Pipelines, which contains one repeated (list) field with the string names of the queues to be paused; the second argument is Empty, which means that the function does not return a result. An error is returned if the request fails.

Resume(in *jobsv1.Pipelines, out *jobsv1.Empty) error - The arguments: the first argument is a Pipelines, which contains one repeated (list) field with the string names of the queues to be resumed; the second argument is Empty, which means that the function does not return a result. An error is returned if the request fails.

List(in *jobsv1.Empty, out *jobsv1.Pipelines) error - The arguments: the first argument is an Empty, meaning that the function does not accept anything (from the point of view of the PHP API, an empty string should be passed); the second argument is Pipelines, which contains one repeated (list) field with the string names of all available queues. An error is returned if the request fails.

Declare(in *jobsv1.DeclareRequest, out *jobsv1.Empty) error - The arguments: the first argument is a DeclareRequest, which contains one map<string, string> pipeline field of queue configuration; the second argument is Empty, which means that the function does not return a result. An error is returned if the request fails.

Stat(in *jobsv1beta.Empty, out *jobsv1beta.Stats) error - The arguments: the first argument is an Empty, meaning that the function does not accept anything (from the point of view of the PHP API, an empty string should be passed); the second argument is Stats, which contains one repeated (list) field named Stats of type Stat. An error is returned if the request fails.

From the PHP point of view, such requests (List for example) are as follows:
